Updated on 01/26/2026

Large Language Models (LLMs) are rapidly growing as a new front end of search, powering AI assistants, chatbots, and search engine features that answer user queries directly instead of pointing to traditional links — and significantly changing the way B2B buyers research, consider, and make purchase decisions in the process.

To deliver relevant, high-quality responses, which often require up-to-date, real-world information, LLMs rely on publicly available web content as a key source of truth. In doing so, LLMs face a major obstacle: converting richly structured HTML packed with navigation menus, dynamic JavaScript, ads, and styling into linear, LLM-friendly plain text is both difficult and imprecise. Context windows are limited, and this noisy conversion often omits critical information or misrepresents structure.

Let’s take a look at how we can help LLMs crawl and better understand website content.

What is LLMs.txt?

A newly proposed format, first introduced by Jeremy Howard, co-founder of Answer.AI, and referred to as ‘/LLMs.txt’ or ‘/LLM.txt’, provides a solution by offering a clean, small Markdown file located in a site’s root directory. An LLMs.txt file distills the most essential context of website content into a format that’s easy for both humans and LLM parsers to read and use reliably. LLMs.txt effectively acts as a curated, AI-optimized roadmap for LLMs, making web content easier to interpret, cite, and represent accurately in AI outputs.

LLMs.txt is a plain text file placed at the root of a domain, similar to robots.txt or sitemap.xml. The LLMs.txt file format uses Markdown elements to provide a curated list of high-value pages for a domain. Each entry typically includes a title, a brief summary, and a direct link. Think of LLMs.txt for SEO and GEO (Generative Engine Optimization) as an AI chatbot version of your robots.txt file. Its purpose is to make it easier for ChatGPT, Google Gemini, Microsoft Copilot, Claude, and other popular chatbots to understand your website content in a structured, relevant context optimized for accurate AI interpretation.

Rather than crawling and guessing, LLMs can read this file to:

- Quickly process what a site is about

- Spot which pages matter most

- Understand how to represent that content in AI-generated results

For site owners and SEO professionals, LLMs.txt provides a way to influence how content is surfaced in AI-driven environments, including AI search, assistants, and chat interfaces, without relying on traditional HTML parsing.

How to Create LLMs.txt

Creating an LLMs.txt file is a straightforward process, particularly for technical SEOs and developers who have experience working with a website’s XML sitemap, robots.txt and file directory. With that said, following standard practices is key to making it useful for AI systems, particularly as LLMs.txt is a newly introduced format that does not have clearly defined instructions that are “set in stone” like robots.txt has.

First, place the file at the root of your domain (https://yourdomain.com/llms.txt) so that it is easily discoverable by LLM crawlers. The file should be written in plain text using simple Markdown syntax, emphasizing clarity and conciseness. When creating LLMs.txt, follow these best practices:

- Use clear, descriptive section headers for access rules

Instead of Allow: or Disallow: directives, use simple Markdown-style headings to indicate what should or shouldn’t be used by AI systems. For example:

  - # Approved high-value public content:

  - # Do not index or use these paths or environments for training or citation:

This avoids confusion and aligns with emerging community guidelines for AI-friendly formatting.

- Organize entries by content category

Group content under headers like ## Blog, ## Product, ## Documentation, etc. This helps LLMs understand how your content is structured and themed.

- Write a short, editorial-style summary

Add a short sentence summary beneath each title. Aim to describe the page’s value in plain language: think of it as how you’d explain the content to a helpful assistant.

- Include the full canonical URL

Link directly to the public version of the page using the full URL (https://…). Avoid redirects or tracking parameters.

- Include your XML Sitemap

Link to your website sitemap to better help AI chatbots read your website content.

- Keep it clean, small, and readable

Limit the file to around 10–30 entries and keep formatting consistent. This ensures the file is digestible by LLMs without exhausting their context limits.

List only indexable, high-value pages, such as key product pages, blog posts, resource pages, documentation, or company overviews. Avoid including low-quality, time-sensitive, unpublished or internal content. Keep in mind that the LLMs.txt file should be relatively small and readable, as overloading the file can dilute its purpose and reduce parsing efficiency for LLMs.
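The practices above can be sketched as a small Python script that renders a file from a list of entries. This is only an illustrative sketch, not an official tool: the `Entry` class, the `check_url` and `render_llms_txt` helpers, and the `max_entries` default of 30 are hypothetical names and values chosen to mirror the guidance in this article, and yourdomain.com is a placeholder.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass
class Entry:
    """One curated page: title, one-sentence summary, canonical URL."""
    title: str
    summary: str
    url: str


def check_url(url: str) -> list[str]:
    """Return a list of problems with a URL (empty if it looks canonical)."""
    parts = urlsplit(url)
    problems = []
    if parts.scheme != "https" or not parts.netloc:
        problems.append("not a full https:// URL")
    if parts.query:
        problems.append("has query/tracking parameters")
    return problems


def render_llms_txt(domain: str, sections: dict[str, list[Entry]],
                    max_entries: int = 30) -> str:
    """Render entries grouped by category, in the layout described above."""
    total = sum(len(entries) for entries in sections.values())
    if total > max_entries:
        raise ValueError(f"{total} entries exceeds the limit of {max_entries}")

    lines = [
        f"# LLMs.txt for https://{domain}",
        "",
        "# Approved high-value public content:",
        "",
        f"Sitemap: https://{domain}/sitemap.xml",
        "",
    ]
    for heading, entries in sections.items():
        lines += [f"## {heading}", ""]
        for entry in entries:
            problems = check_url(entry.url)
            if problems:
                raise ValueError(f"{entry.url}: {', '.join(problems)}")
            # Title bullet, then summary, then URL, each on its own line.
            lines += [f"- {entry.title}", "", entry.summary, "", entry.url, ""]
    return "\n".join(lines)
```

Calling `render_llms_txt("yourdomain.com", {"Blog": [Entry(...)]})` returns the file contents as a string, which can then be saved as llms.txt. Rejecting URLs with query strings is a deliberately strict choice for this sketch; a site whose canonical URLs legitimately use query parameters would need to relax it.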

Think of LLMs.txt as a strategic signal, not a sitemap replacement. It’s a curated highlight reel of your domain meant to help LLMs understand and represent your site accurately. It is also important to remember to review and update the file regularly, particularly when publishing major new content or removing outdated material. Similar to instructional files for SEO, treat it as a living document that reflects your most authoritative and AI-relevant content.

How to Implement an LLMs.txt File

Naturally, your next question will be, “How do I implement LLMs.txt for my website?” Once your LLMs.txt file is complete, implementation is simple, but it is critical to do it correctly so that AI crawlers can reliably find and read it.

- Upload the file to the root directory of your domain

The LLMs.txt file must be accessible at: https://yourdomain.com/llms.txt

This placement mirrors how robots.txt and sitemap.xml are structured, and ensures that AI crawlers know exactly where to look. To ensure this is done correctly, upload the LLMs.txt file to the same folder in your file directory that your robots.txt lives in.

- Use UTF-8 encoding and .txt file format

The file should be saved as plain text using UTF-8 encoding, with the exact filename llms.txt or llm.txt. Avoid appending extensions like .md or storing it in subfolders.

- Ensure it’s publicly accessible

Check that there are no permissions, redirects, or firewall rules blocking access to the file. You can test it by entering the full URL in your browser and confirming the content loads after it is uploaded.

- Avoid referencing it in robots.txt or sitemap.xml

Unlike sitemaps, you do not need to declare LLMs.txt in your robots.txt file. LLMs are expected to look for it by default at the root.
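The accessibility checks above can also be scripted rather than done by hand in a browser. The following is a minimal sketch using only Python's standard library; `check_llms_txt` and `fetch_and_check` are hypothetical helper names, and the specific checks (HTTP 200, no redirect, valid UTF-8, non-empty body) are one reading of the steps listed here, not an official validation suite.

```python
from __future__ import annotations

from urllib.error import HTTPError
from urllib.request import urlopen


def check_llms_txt(final_url: str, expected_url: str,
                   status: int, body: bytes) -> list[str]:
    """Return a list of problems found in a fetched llms.txt response."""
    problems = []
    if status != 200:
        problems.append(f"unexpected HTTP status {status}")
    if final_url != expected_url:
        problems.append(f"redirected to {final_url}")
    try:
        text = body.decode("utf-8")
    except UnicodeDecodeError:
        problems.append("body is not valid UTF-8")
    else:
        if not text.strip():
            problems.append("file is empty")
    return problems


def fetch_and_check(domain: str) -> list[str]:
    """Fetch https://<domain>/llms.txt and run the checks above."""
    expected_url = f"https://{domain}/llms.txt"
    try:
        with urlopen(expected_url, timeout=10) as response:
            return check_llms_txt(response.geturl(), expected_url,
                                  response.status, response.read())
    except HTTPError as err:
        # urlopen raises for 4xx/5xx responses instead of returning them.
        return [f"unexpected HTTP status {err.code}"]
```

An empty list from `fetch_and_check("yourdomain.com")` means none of these particular problems were detected. Keeping the checks in a separate function from the network call makes them easy to run against saved responses as well.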

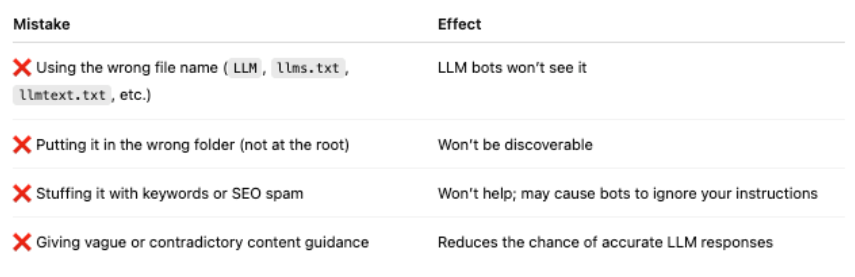

The following outlines common mistakes to avoid when implementing LLMs.txt:

By implementing LLMs.txt correctly, you provide a clear signal to AI chatbots about which content matters most — giving your site a better chance of being understood, cited, and surfaced in AI-driven experiences.

One important thing to note: your LLMs.txt will not conflict with or hurt your SEO visibility even if implemented incorrectly, as it is only consumed by LLM-based bots and not used by search engines like Google to control crawling, indexing or ranking.

LLMs.txt vs robots.txt: Key Differences

While LLMs.txt and robots.txt may appear similar at a glance in that both are text files placed at the root of a website, their purposes, audiences, and formats are fundamentally different. As previously referenced, robots.txt is designed for traditional web crawlers like Googlebot, providing directives on which parts of a site should or shouldn’t be crawled by search engines. It uses strict, machine-readable syntax (e.g., Allow and Disallow) and plays a key role in SEO and organic search visibility.

By contrast, LLMs.txt is built for an entirely different ecosystem: generative AI and large language models. Its goal isn’t to control indexing, but rather to offer a roadmap of high-value content that’s useful for LLMs that are ingesting information to answer questions, summarize topics, or cite sources. Its Markdown-style structure and plain-language summaries help LLMs understand a site’s intent, expertise, and relevance.

Its goal is not to restrict but to guide — highlighting what matters most and providing context LLMs can use to better understand and represent your content in AI-powered environments. Where robots.txt is about access control for web crawlers, LLMs.txt is about content clarity and prioritization for AI systems. In essence, where robots.txt tells traditional search crawlers “what not to do,” LLMs.txt tells AI chatbots “here’s what really matters.”
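To make the contrast concrete, here is a small Python sketch. The robots.txt half uses the standard library's `urllib.robotparser`, which answers a yes/no access question for a given crawler. The LLMs.txt half simply reads the category headings as text, since the format carries guidance rather than enforceable rules. The sample file contents and the `ExampleBot` user agent are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: strict directives a crawler evaluates per URL.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin
Allow: /
"""

# Hypothetical LLMs.txt fragment: Markdown headings and plain-language entries.
LLMS_TXT = """\
# Approved high-value public content:

## Blog

- How to Optimize Your Cloud Infrastructure
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# robots.txt answers "may this crawler fetch this URL?"
can_crawl_admin = parser.can_fetch("ExampleBot", "https://yourdomain.com/admin")
can_crawl_blog = parser.can_fetch("ExampleBot", "https://yourdomain.com/blog/post")

# LLMs.txt has no access rules to evaluate; a reader just extracts
# the curated categories (lines starting with "## ").
categories = [line[3:].strip()
              for line in LLMS_TXT.splitlines()
              if line.startswith("## ")]
```

Here `can_crawl_admin` is `False` and `can_crawl_blog` is `True`, while `categories` is simply the list of section names an AI system could use as context.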

The key differences between LLMs.txt vs robots.txt are outlined in the following chart:

LLMs.txt Example

Now let’s go ahead and put LLMs.txt into practice. Below is a sample LLMs.txt file that demonstrates how to structure and format content for optimal LLM readability. This example follows our outlined best practices — organizing approved pages by category, providing brief summaries, and clearly indicating which areas should not be used for AI training or citation. It also includes a reference to the site’s XML sitemap to help LLMs discover additional context if needed.

# LLMs.txt for https://yourdomain.com

# Last updated: 2025-07-24

# ✅ Approved high-value public content:

Only the following pages are intended for access by AI systems for training, summarization, or citation.

Sitemap: https://yourdomain.com/sitemap.xml

## 🧠 Blog

- How to Optimize Your Cloud Infrastructure

A practical guide to reducing latency, cost, and complexity in modern cloud deployments.

https://yourdomain.com/blog/optimize-cloud-infrastructure

- The Future of AI in Data Management

Insights on how artificial intelligence is transforming storage, processing, and analytics in enterprise IT.

https://yourdomain.com/blog/future-of-ai-data

## 📘 Documentation

- Getting Started Guide

Step-by-step instructions for installing, configuring, and using our platform.

https://yourdomain.com/docs/getting-started

- API Reference

Complete technical documentation for developers integrating with our REST API.

https://yourdomain.com/docs/api-reference

## 💼 Product Pages

- Cloud Platform Overview

High-level overview of our enterprise-grade cloud data platform, including key features and benefits.

https://yourdomain.com/product/cloud-platform

- Security and Compliance

Details on how we handle data privacy, encryption, and compliance certifications.

https://yourdomain.com/product/security

## 📢 About Us

- Company Overview

Learn who we are, what we do, and our mission in the data infrastructure space.

https://yourdomain.com/about

# ❌ Do not index or use these paths or environments for training or citation:

These URLs contain private, temporary, or irrelevant content and should not be used for AI model training or inference.

- https://yourdomain.com/login

- https://yourdomain.com/admin

- https://yourdomain.com/internal

- https://yourdomain.com/experimental

Calling in Reinforcements to Generate LLMs.txt for Your Site

Firebrand’s expert GEO/SEO team can help you analyze your domain’s sitemap to create and implement LLMs.txt for a measurable boost to your GEO presence. Simply reach out for more information based on your business-specific needs. We’re here to help you improve your AI search visibility and crush your marketing goals!

LLMs.txt Best Practices FAQs

What is LLMs.txt and what problem does it solve?

LLMs.txt is a proposed standard that helps large language models better understand which parts of your website matter most. It solves the problem of AI systems misinterpreting, overlooking, or over-indexing low-value pages by giving them a curated, human-defined map of your most important content.

How does LLMs.txt support Generative Engine Optimization (GEO)?

LLMs.txt supports GEO by guiding AI systems toward authoritative, high-intent pages that reflect your brand’s expertise. Instead of relying solely on crawling heuristics, LLMs get clearer context about what content should influence generated answers, summaries, and recommendations.

Is LLMs.txt meant to replace SEO best practices?

No. LLMs.txt complements SEO rather than replacing it. Traditional SEO ensures your content is discoverable and indexable, while LLMs.txt helps shape how that content is interpreted and reused by generative AI tools. Strong GEO performance still depends on strong SEO fundamentals.

What types of content should be prioritized in an LLMs.txt file?

Prioritize content that clearly communicates expertise and intent: pillar pages, core service offerings, category-defining blog posts, FAQs, and high-value educational resources. The goal is clarity and authority, not volume.

How is LLMs.txt different from robots.txt?

Robots.txt controls access by telling crawlers what they can or can’t crawl. LLMs.txt doesn’t block or allow anything — instead, it provides guidance. It helps AI systems understand which pages best represent your brand, expertise, and intent, making it a strategic visibility file rather than a permissions file.

Does adding LLMs.txt guarantee better visibility in AI search results?

No guarantees — but it increases alignment. LLMs.txt improves the likelihood that AI systems reference the right pages and represent your brand accurately. It’s a directional signal, not a ranking lever, and works best as part of a broader GEO strategy.

How often should LLMs.txt be updated?

Update it whenever your strategic content changes — new pillar pages, repositioning, major launches, or GEO expansions. Treat it like a living representation of your brand’s most important digital assets, not a one-time technical task.

About the Author

Arman Khayyat is a Bay Area–based senior digital marketing leader and Account Supervisor at Firebrand, where he helps B2B startups and scaleups accelerate growth through performance-driven programs. He leads client programs across PPC, SEO, and marketing analytics—helping high-growth startups and enterprise tech brands scale efficiently. His expertise spans everything from paid search architecture and technical SEO audits to funnel analytics and conversion optimization.

Prior to joining Firebrand, Arman held digital marketing leadership roles at B2B technology firms and agencies, bringing over a decade of experience in growth marketing and performance media. Arman frequently writes about B2B lead generation and search strategy, and he is currently exploring the impact of LLMs and Generative Engine Optimization (GEO) on organic discoverability.

Outside of work, you’ll find him experimenting with AI tools, perfecting his espresso technique, or watching his favorite sports teams.

Follow Arman on LinkedIn or explore more on Firebrand’s blog.